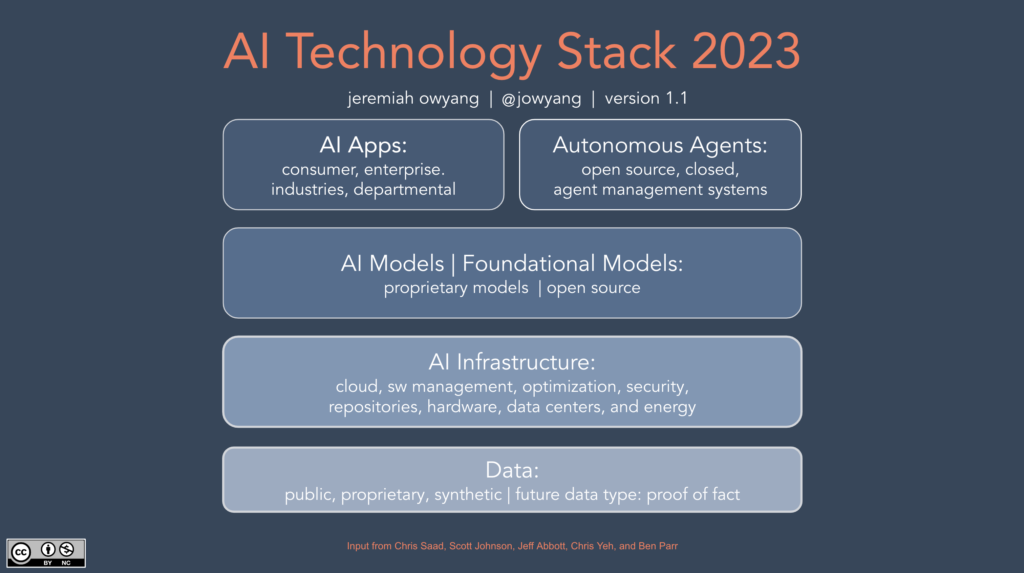

The “AI Technology Stack” is a comprehensive framework that encapsulates the diverse aspects of AI implementation, from data acquisition to the deployment of AI-based solutions. It illustrates the complex, interlocking elements that combine to form robust AI systems. Each layer of the stack serves a distinct purpose and operates interdependently, demonstrating how AI technologies function from the ground up.

The AI Tech Stack

The layers are listed from bottom to top, starting with the foundations:

- Data Layer: The foundation of any AI system is data. It is the raw material that the system learns from and bases its decisions on. Data sources fall into a few types: public, proprietary (80% of the world’s data is behind a firewall), and synthetic data created by AI, a newer type that I want to call out as unique. A future data type, “proof of fact,” might guarantee the authenticity and veracity of a data point, enhancing the reliability of AI systems. I expect to see a new protocol that points to facts (likely historical facts, dates, and weather, to start with). Example: Businesses may use public data, such as social media trends, to inform their marketing strategies. Alternatively, a tech company might rely on proprietary data from user interactions to refine its products or services, while using synthetic data to test new features.

- AI Infrastructure Layer: This layer is the technological backbone that supports AI operations. It includes cloud storage, software management, optimization algorithms, security measures, repositories for storing data, hardware components, data centers, and energy management. A crucial aspect of this layer is MLOps, the processes and practices for managing the lifecycle of AI models. Example: A fintech startup might leverage cloud infrastructure to host and process its user data. At the same time, it would implement robust security measures and use optimization algorithms to ensure efficient data analysis. MLOps would be crucial in managing the lifecycle of its AI models for credit risk assessment.

- AI Models | Foundational Models: At this level, the algorithms and models that process and learn from data are built. They can be either proprietary models (OpenAI, Bard, Amazon Titan, Inflection) or open-source ones (see the leaderboard in the Hugging Face repository). Proprietary AI models are developed in-house and offer competitive advantages, while open-source models are publicly available and can be customized for various uses. Example: An e-commerce platform might develop proprietary recommendation models based on its customers’ shopping behaviors. Conversely, a public corporation might leverage open-source models for predicting supply chain changes, keeping its private proprietary data on-premises and customizing the models to suit its unique needs.

- AI Apps: AI applications bring AI’s benefits to end-users. They come in a variety of forms, such as consumer apps, enterprise solutions, and industry-specific tools. The majority of AI projects to date fall into this category, and these apps put the power of AI directly in the hands of users and businesses (see “There’s an AI for that”). Example: A healthcare provider could use an AI app that predicts disease risk based on patient data. An individual user might enjoy a music streaming service that uses AI to suggest songs based on their listening habits.

- Autonomous Agents: The top layer represents an emerging category – autonomous agents. These are systems that operate independently to achieve specific goals, using AI to make decisions. These can be either open-source or proprietary and require specialized management systems. Example: A logistics company might deploy autonomous agents for managing warehouse operations, improving efficiency. On the consumer end, personal virtual assistants that help with scheduling and reminders can be seen as autonomous agents, simplifying daily life tasks.
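The bottom-to-top ordering of the layers above can be sketched as a small data structure. This is only an illustration, not part of any real framework: the `Layer` class, the `STACK` tuple, and the `depends_on` helper are hypothetical names, and treating each layer as resting on every layer beneath it is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One layer of the stack (hypothetical helper type for illustration)."""
    name: str
    summary: str


# Ordered bottom to top, mirroring the list above.
STACK = (
    Layer("Data", "public, proprietary, and synthetic data sources"),
    Layer("AI Infrastructure", "cloud storage, security, hardware, MLOps"),
    Layer("AI Models", "proprietary or open-source foundational models"),
    Layer("AI Apps", "consumer, enterprise, and industry-specific tools"),
    Layer("Autonomous Agents", "systems that act independently toward goals"),
)


def depends_on(layer_name: str) -> list[str]:
    """Return the names of all layers beneath the named layer, bottom first.

    Assumption: a layer builds on everything below it in the stack.
    Raises ValueError if the name is not in STACK.
    """
    names = [layer.name for layer in STACK]
    return names[: names.index(layer_name)]


if __name__ == "__main__":
    for layer in STACK:
        print(f"{layer.name}: {layer.summary}")
    print(depends_on("AI Apps"))
```

Under this simplification, asking what "AI Apps" depends on returns the Data, AI Infrastructure, and AI Models layers, in that order.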

Understanding each layer of the AI technology stack helps us appreciate the complexity and potential of AI. As we continue to innovate, each layer will evolve, driving new applications and advancements in artificial intelligence. Input from Chris Saad, Scott Johnson, Jeff Abbott, Chris Yeh, Ben Parr and Mark Birch.